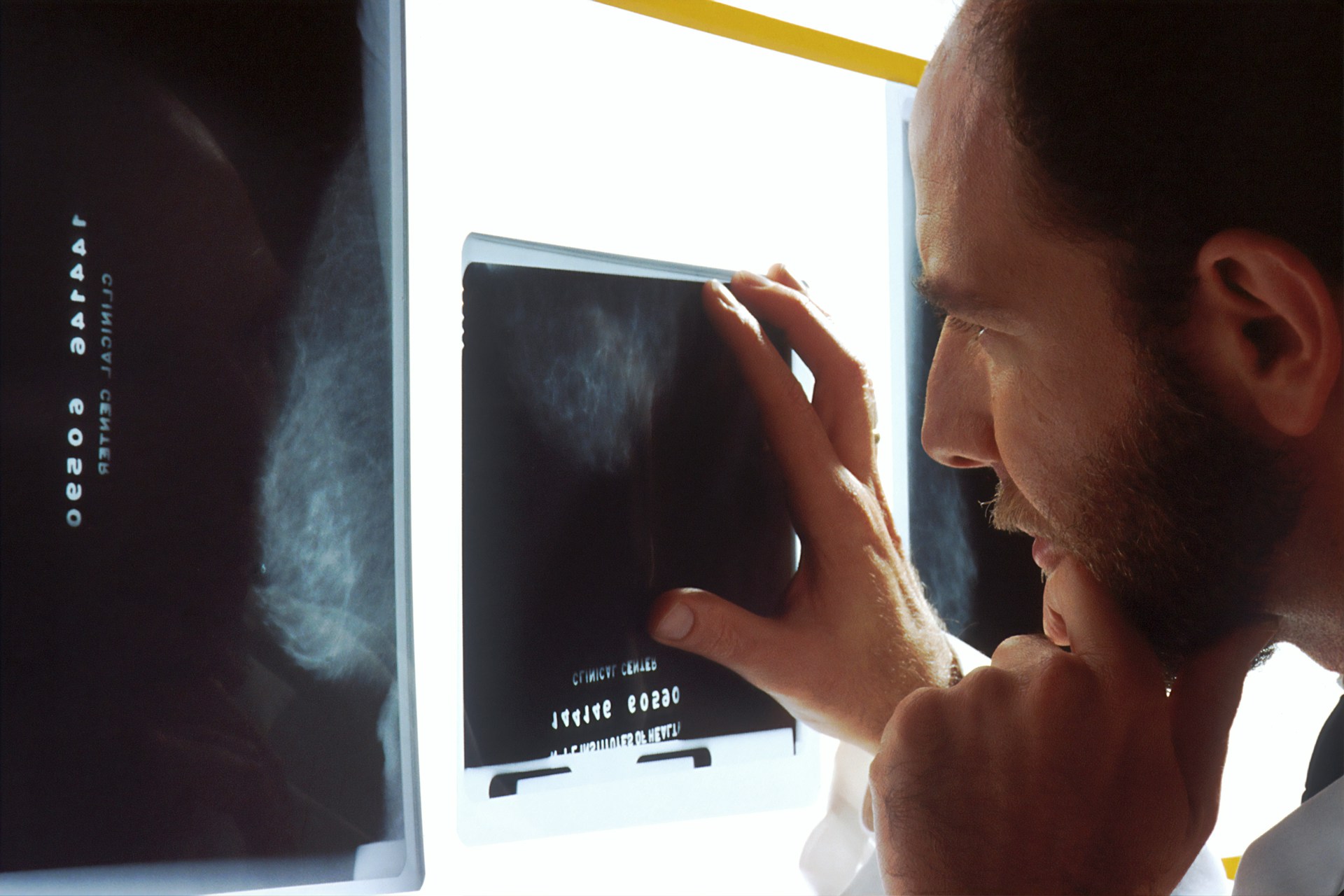

Although artificial intelligence is advancing rapidly, its applications in medical imaging have not yet met clinical expectations. ChatGPT-4 Vision is the first version of the language model capable of interpreting both text and images. It shows promising results in radiology but also has significant limitations, particularly in image interpretation.

Multimodal AI is designed to assist professionals.

A Rigorous Study to Test ChatGPT-4 Vision

An American team gave ChatGPT-4 Vision 377 questions from diagnostic radiology training exams, nearly half of which were image-based. Overall, the model answered 65.3% of the questions correctly, but there was a significant disparity:

- 81.5% for text questions,

- and 47.8% for visual questions.
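As a quick consistency check on these figures, the sketch below solves for the share of image-based questions implied by the three reported accuracies. It assumes every question counts equally and is either text-only or image-based; the variable names are illustrative and do not come from the study.

```python
# Accuracy figures reported in the article (percent of questions answered correctly).
overall = 65.3
text_only = 81.5
image_based = 47.8
total_questions = 377

# Assumption: overall = (1 - p) * text_only + p * image_based,
# where p is the share of image-based questions. Solve for p.
image_share = (text_only - overall) / (text_only - image_based)

# Approximate count only: the inputs are rounded percentages.
implied_image_questions = round(image_share * total_questions)

print(f"Implied share of image-based questions: {image_share:.1%}")
print(f"Implied count: about {implied_image_questions} of {total_questions}")
```

The implied share comes out at about 48%, which agrees with the statement that nearly half of the questions were image-based.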

Textual comprehension is an undeniable strength.

When it comes to analyzing written information, ChatGPT-4 Vision performs very well. It can:

- synthesize reports,

- explain complex data in plain language,

- and assist with medical writing.

In other words, it can support radiologists in their documentation work.

Image reading: uneven performance

When it comes to images, performance takes a nosedive. While results are satisfactory in some specialties, such as thoracic radiology (up to 69%), ChatGPT-4 Vision performs poorly in nuclear medicine.

Causes:

- Limited visual analysis.

- Lack of specific medical training.

- Hallucinations (plausible but false answers).

Hallucinations pose a significant clinical risk.

Some incorrect answers are given with confidence. The danger lies in false but convincing interpretations that are difficult for a non-specialist to detect.

This underscores that radiological interpretation remains a context-dependent medical skill that is difficult to replicate.

The Role of Prompts: A Limited Performance Lever

Researchers tested different types of wording to see if prompting would improve the results:

- simple prompts,

- step-by-step reasoning (“chain of thought”),

- and long, detailed instructions.

The result was a slight improvement on text questions only. There was no noticeable improvement on images. Even worse, ChatGPT-4 Vision refused to answer over 120 questions, most of which included images.

Real potential, but use with caution

Positive points:

- Good command of textual content.

- Potential use as a teaching tool or documentary aid.

Current limitations:

- Unstable image reading.

- Risk of serious errors.

- Frequent response refusals.

What prospects are there for a trusted radiology AI?

To make progress, we need to:

- Integrate specialized radiology databases.

- Train the AI with validated clinical cases.

- Establish safeguards for use.

AI development in radiology is a path to the future that requires rigor, ethics, and scientific validation.

In conclusion

ChatGPT-4 Vision does not replace radiologists. It can assist, document, and explain, but it cannot see. Its reliability in medical imaging has yet to be demonstrated. For now, caution is advised.